AI (Artificial Intelligence) is the ability of a machine to perform cognitive functions as humans do, such as perceiving, learning, reasoning and solving problems. The benchmark for AI is human-level performance in terms of reasoning, speech, and vision.

Narrow AI: An artificial intelligence is said to be narrow when the machine can perform a specific task better than a human. Current AI research is at this stage.

General AI: An artificial intelligence reaches the general state when it can perform any intellectual task with the same accuracy level as a human would.

Strong AI: An AI is strong when it can beat humans in many tasks.

In 1956, a group of experts from different backgrounds decided to organize a summer research project on AI.

The primary purpose of the research project was to tackle "every aspect of learning or any other feature of intelligence that can in principle be so precisely described, that a machine can be made to simulate it."

The proposal for the summit included the following topics:

Automatic Computers

How Can a Computer Be Programmed to Use a Language?

Neuron Nets

Self-improvement

It led to the idea that intelligent computers can be created. A new era began, full of hope - Artificial intelligence.

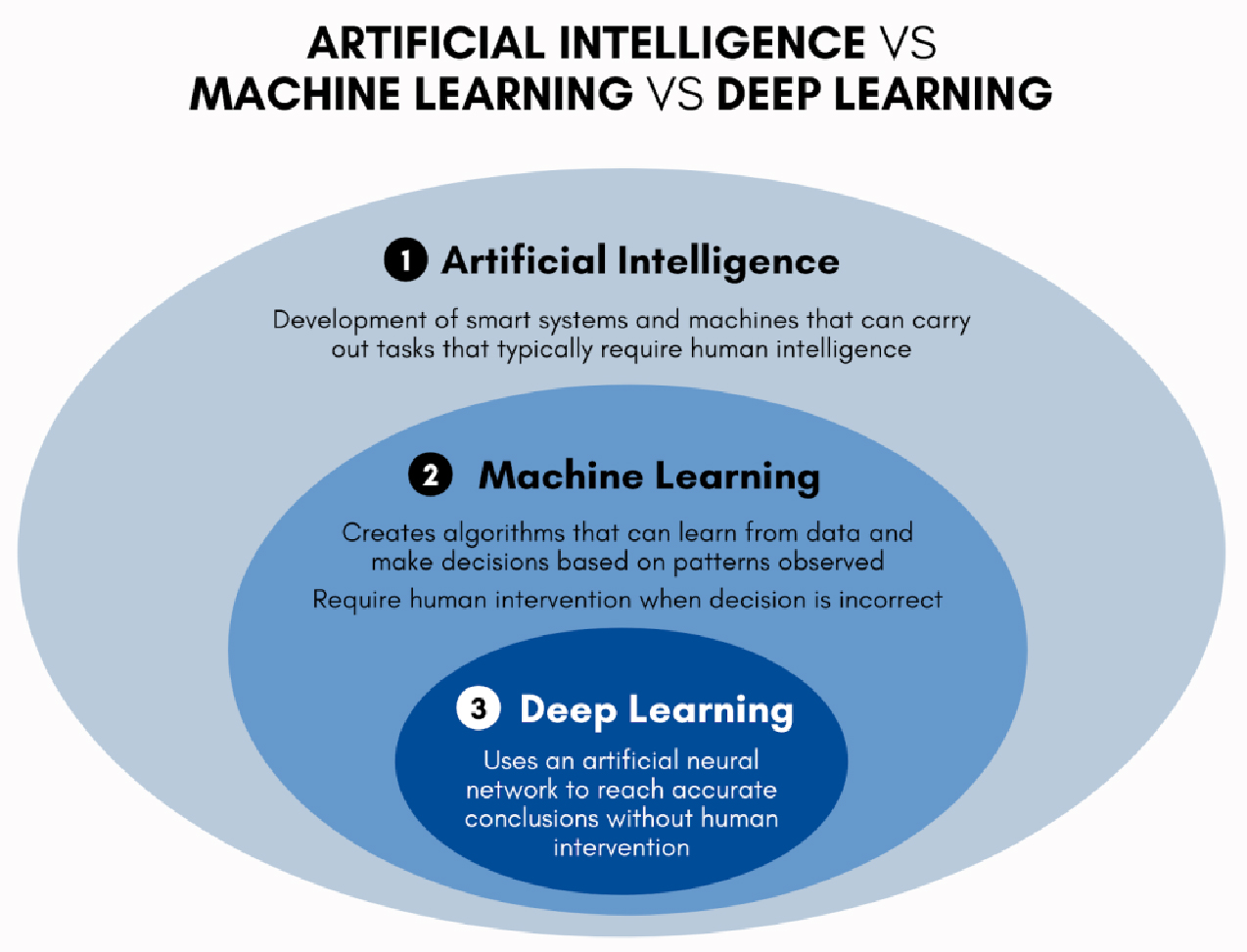

Artificial intelligence can be divided into three subfields:

AI is the study of how to train computers to do things that, at present, humans do better.

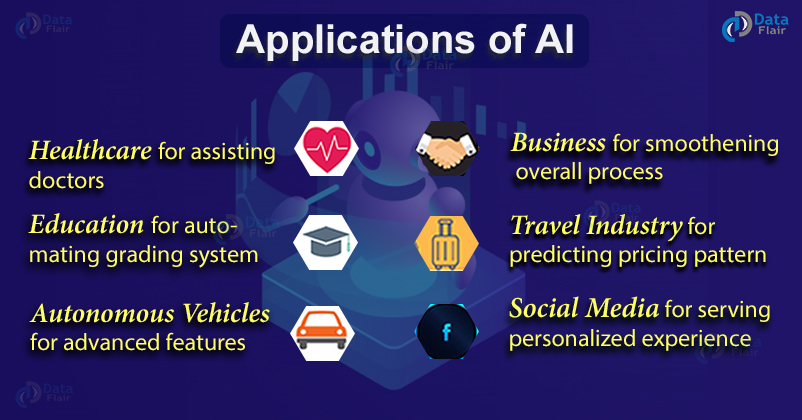

AI revolves around mimicking human decision-making processes and performing intellectual tasks in a human-like way. Tasks include:

AI can refer to anything from a computer program playing a game of chess, to a voice-recognition system like Apple’s Siri, to self-driving cars, to robots offering concierge services in a Japanese bank.

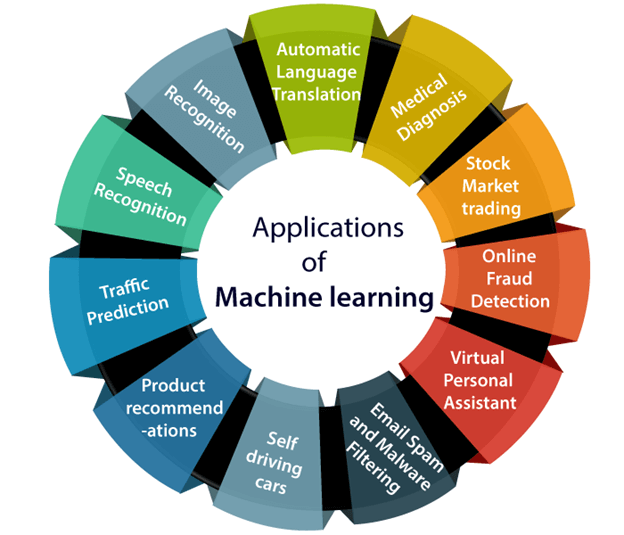

Machine Learning is a form of learning in which a machine learns on its own without being explicitly programmed. It is an application of AI that gives a system the ability to learn and improve automatically from experience. In effect, the program is generated from examples of its inputs and the corresponding outputs.

The key concept here is that machines ingest large datasets, “learn” for themselves and when exposed to new data they can make decisions. Simply put: Unlike hand-coding a computer program with specific instructions to complete a task, ML allows the program to learn to recognize patterns on its own and make predictions.
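The contrast between hand-coding a rule and learning it from data can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the temperature data, the function names (`fit_line`, `predict`) and the choice of ordinary least squares are hypothetical and not taken from any system mentioned here.

```python
# Hand-coded approach: the programmer writes the rule explicitly.
def fahrenheit_hand_coded(celsius):
    return celsius * 1.8 + 32.0


# Learning approach: the rule is estimated from example input/output pairs.
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: Celsius readings and matching Fahrenheit values.
celsius = [-10.0, 0.0, 10.0, 20.0, 30.0]
fahrenheit = [14.0, 32.0, 50.0, 68.0, 86.0]

model = fit_line(celsius, fahrenheit)
print(predict(model, 25.0))  # close to what fahrenheit_hand_coded(25.0) returns
```

The learned model was never given the conversion formula; it recovered the slope and intercept from the examples alone, which is the sense in which the program is produced from inputs and outputs.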

A few real life examples are:

Google Gmail’s Smart Reply: responds to emails on your behalf by suggesting varied responses.

Google Maps: suggests the fastest routes to reduce travelling time, by analysing the speed of the traffic with the help of location data.

PayPal: uses ML algorithms on customer data to fight against fraud.

Netflix: has launched a TV series suggestion engine which powers intelligent entertainment.

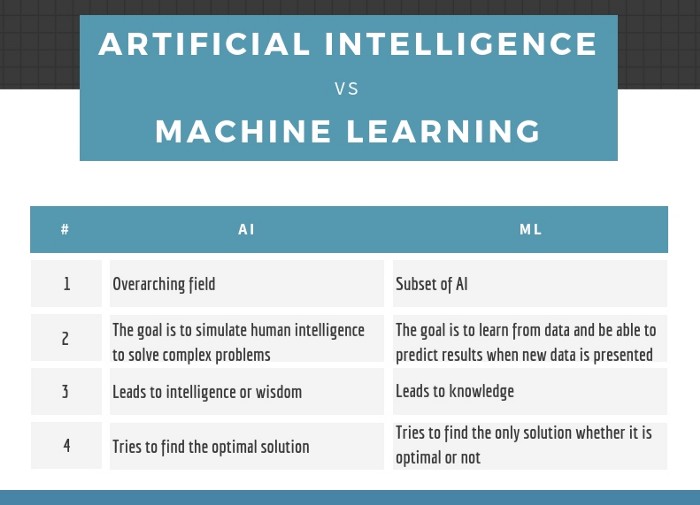

AI is a broader concept than ML: it addresses the use of computers to mimic the cognitive functions of humans.

When machines carry out tasks based on algorithms in an “intelligent” manner, that is AI.

vs.

ML is a subset of AI and focuses on the ability of machines to receive a set of data and evolve as they learn more about the information they are processing.

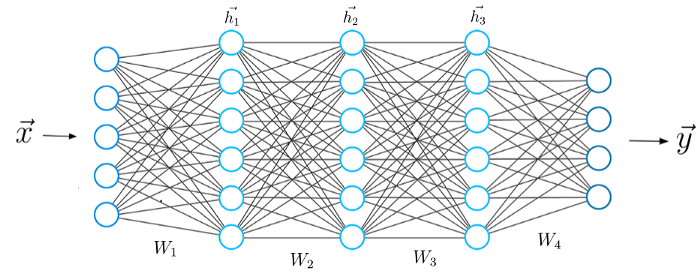

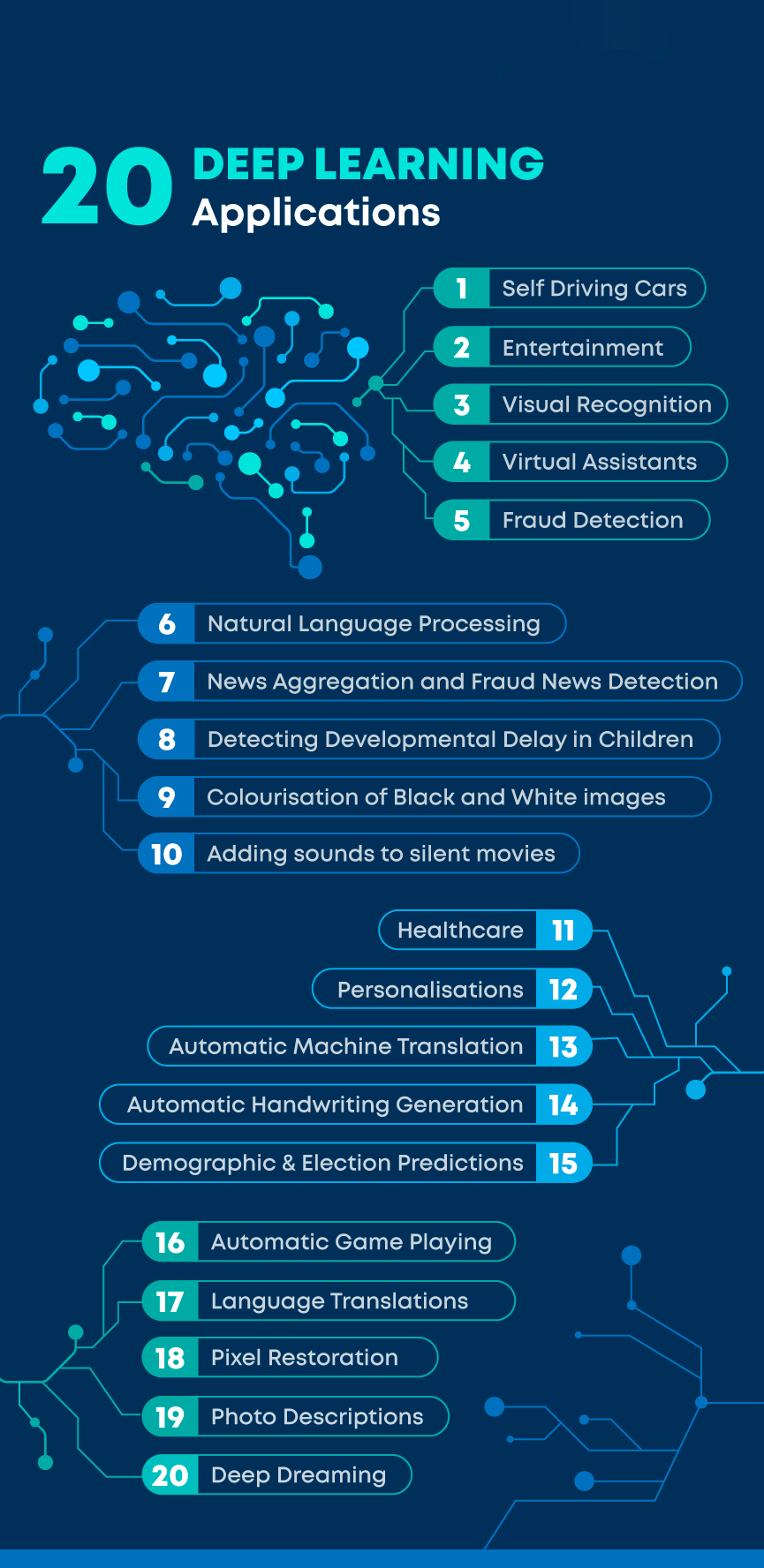

Deep Learning is a subset of Machine Learning, which in turn is a subset of Artificial Intelligence.

Deep Learning is inspired by the structure of a human brain. Deep learning algorithms attempt to draw similar conclusions as humans would by continually analyzing data with a given logical structure. To achieve this, deep learning uses a multi-layered structure of algorithms called neural networks.

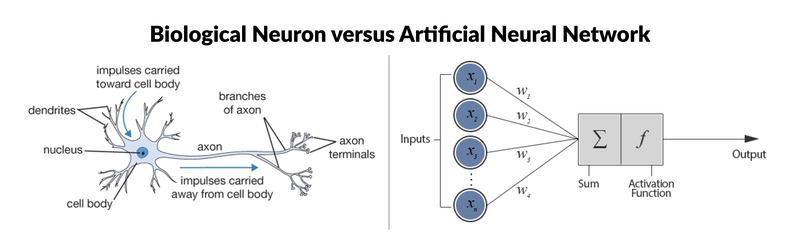

Artificial neural network (ANN)

Artificial neural network refers to a system or an algorithm used in deep learning that mimics how the human brain’s neural circuits function, such as when making sense of things and events.

|

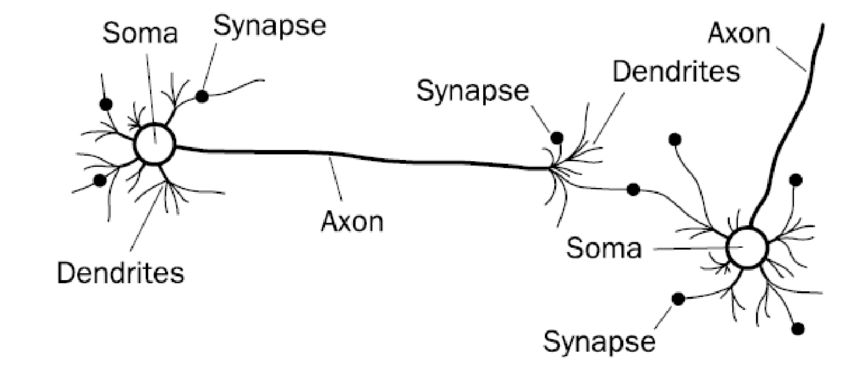

Biological Neural Network The neuron is the basic working unit of the brain, a specialized cell designed to transmit information to other nerve cells, muscle, or gland cells. Neurons are cells within the nervous system that transmit information to other nerve cells, muscle, or gland cells. Most neurons have a cell body, an axon, and dendrites. A biological neural network/circuit is a population of neurons interconnected by synapses to carry out a specific function when activated. Neural circuits interconnect to one another to form large scale brain networks. Biological neural networks have inspired the design of artificial neural networks, but artificial neural networks are usually not strict copies of their biological counterparts. |
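A single artificial neuron, the building block of an ANN, can be sketched as a weighted sum of its inputs passed through an activation function. The code below is a minimal illustration, not a model of a real neuron: the sigmoid activation, the function names and the example weights are all assumptions chosen for clarity.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical inputs and weights, chosen so the weighted sum is exactly 0.
output = neuron([1.0, 0.0], weights=[0.5, -0.5], bias=-0.5)
print(output)  # sigmoid(0) is 0.5
```

In the loose analogy often drawn with biology, the inputs play the role of signals arriving at the dendrites, the weights stand in for synapse strengths, and the output is the signal sent along the axon. As noted above, this is an inspiration rather than a strict copy.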

An artificial neural network is an architecture in which layers are stacked on top of each other. In deep learning, the learning phase is carried out by a neural network.

Thus, deep learning does not mean the machine learns more in-depth knowledge; it means the machine uses multiple layers to learn from the data. The depth of the model is the number of layers it contains. For instance, Google's GoogLeNet model for image recognition has 22 layers.
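The idea of stacked layers and model depth can be made concrete with a small sketch. It assumes fully connected layers with a ReLU activation and random, untrained weights; the layer sizes and helper names (`make_layer`, `forward`) are hypothetical and only illustrate the structure, not a trained model.

```python
import random


def relu(values):
    """Keep positive values, replace negative ones with 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weights, biases)
    ]


def make_layer(n_in, n_out, rng):
    """Create random (untrained) weights and zero biases for one layer."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(layers, inputs):
    """Pass the data through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = relu(dense(activations, weights, biases))
    return activations


rng = random.Random(0)
layer_sizes = [4, 8, 8, 3]  # 4 inputs, two hidden layers of 8 units, 3 outputs
layers = [
    make_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

depth = len(layers)  # the depth of the model is its number of layers
output = forward(layers, [0.2, 0.4, 0.6, 0.8])
print(depth, len(output))  # prints: 3 3
```

Adding more entries to `layer_sizes` makes the model deeper without changing the rest of the code, which is the sense in which depth refers to the number of layers rather than to deeper knowledge.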

| S.No. | Machine Learning | Deep Learning |
| --- | --- | --- |
| 1. | Machine Learning is a superset of Deep Learning. | Deep Learning is a subset of Machine Learning. |
| 2. | Data representation differs from Deep Learning: Machine Learning uses structured data. | Data representation differs from Machine Learning: Deep Learning uses artificial neural networks (ANNs). |
| 3. | Machine Learning is an evolution of AI. | Deep Learning is an evolution of Machine Learning; in essence, it describes how deep the machine learning goes. |
| 4. | Typically works with thousands of data points. | Typically works with big data: millions of data points. |
| 5. | Outputs: numerical values, such as a classification or a score. | Outputs: anything from numerical values to free-form elements, such as free text and sound. |
| 6. | Uses various types of automated algorithms that learn to model functions and predict future actions from data. | Uses a neural network that passes data through processing layers to interpret data features and relations. |
| 7. | Algorithms are directed by data analysts to examine specific variables in data sets. | Algorithms are largely self-directed in their data analysis once they are put into production. |
| 8. | Machine Learning is widely used to stay competitive and learn new things. | Deep Learning solves complex machine learning problems. |

| CONCERNS | Description |
| --- | --- |
| Human agency | Decision-making on key aspects of digital life is automatically ceded to code-driven, "black box" tools. People lack input and do not learn the context about how the tools work. They sacrifice independence, privacy and power over choice; they have no control over these processes. This effect will deepen as automated systems become more prevalent and complex. |
| Data abuse | Most AI tools are and will be in the hands of companies striving for profits or governments striving for power. Values and ethics are often not baked into the digital systems making people's decisions for them. These systems are globally networked and not easy to regulate or rein in. |
| Job loss | The efficiencies and other economic advantages of code-based machine intelligence will continue to disrupt all aspects of human work. While some expect new jobs will emerge, others worry about massive job losses, widening economic divides and social upheavals, including populist uprisings. |
| Dependence lock-in | Many see AI as augmenting human capacities but some predict the opposite - that people's deepening dependence on machine-driven networks will erode their abilities to think for themselves, take action independent of automated systems and interact effectively with others. |
| Mayhem | Some predict further erosion of traditional sociopolitical structures and the possibility of great loss of lives due to accelerated growth of autonomous military applications and the use of weaponized information, lies and propaganda to dangerously destabilize human groups. Some also fear cybercriminals' reach into economic systems. |

| SUGGESTED SOLUTIONS | Description |
| --- | --- |
| Global good is No. 1 | Digital cooperation to serve humanity's best interests is the top priority. Ways must be found for people around the world to come to common understandings and agreements - to join forces to facilitate the innovation of widely accepted approaches aimed at tackling wicked problems and maintaining control over complex human-digital networks. |
| Values-based system | Adopt a 'moonshot mentality' to build inclusive, decentralized intelligent digital networks 'imbued with empathy' that help humans aggressively ensure that technology meets social and ethical responsibilities. Some new level of regulatory and certification process will be necessary. |
| Prioritize people | Reorganize economic and political systems toward the goal of expanding humans' capacities and capabilities in order to heighten human/AI collaboration and staunch trends that would compromise human relevance in the face of programmed intelligence. |

© 2024 iasgyan. All rights reserved.