Description


Picture Courtesy: https://www.linkedin.com/pulse/european-union-20-million-euros-develop-on-demand-ai-platform-lopes

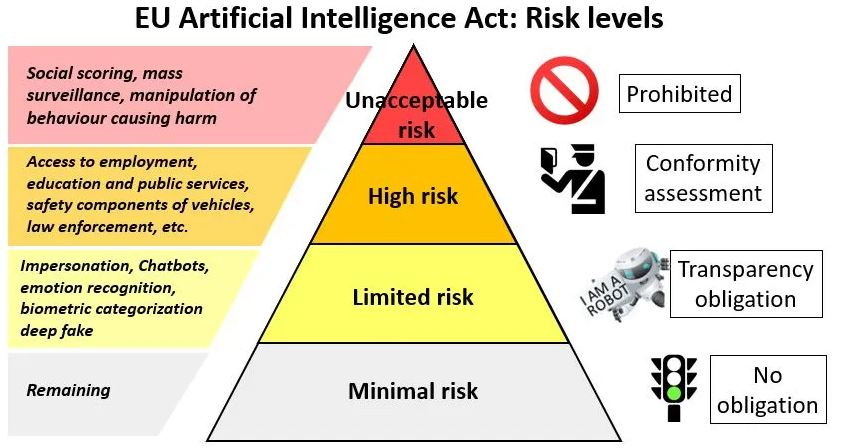

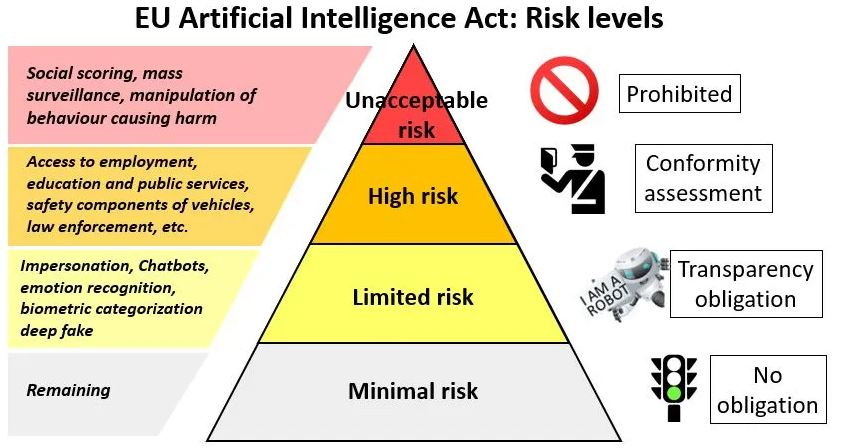

Context: The European Parliament recently passed the Artificial Intelligence Act to regulate AI. It is the world's first comprehensive legal framework governing AI, and it is expected to influence similar laws in other countries.

Details

- The EU's AI Act has the potential to set a global standard for AI legislation. Its comprehensive, risk-based approach accounts for the different risks posed by different AI applications, and it offers a template that other countries can adapt to their own requirements.

- Its clear risk categories (prohibited, high-risk, limited-risk and minimal-risk) provide a benchmark for evaluating AI development and deployment, which could encourage consistency across international standards.

Key Provisions of the EU AI Act

The EU AI Act takes a horizontal approach, which means it applies across all sectors in which AI is used. It classifies AI systems into four risk categories, each with corresponding regulatory requirements:

- Prohibited: Systems that threaten fundamental rights or pose an unacceptable risk, such as social scoring, which generates biased "risk profiles" of people based on their behaviour.

- High-risk: Systems that significantly affect people's lives and rights, such as those used for biometric identification, critical infrastructure, education, healthcare and law enforcement. These systems must meet strict standards of safety, transparency and fairness. This could include human oversight, robust security measures and conformity assessments to demonstrate that they meet EU requirements.

- Limited-risk: Systems that interact with users, such as chatbots or AI-powered recommendation systems. These systems must be transparent about their use of AI so that users can make an informed decision about whether to continue interacting with them.

- Minimal-risk: Systems posing little or no risk, such as spam filters or smart appliances with limited AI capabilities. These systems are largely exempt from specific AI obligations but must still comply with existing laws, such as those governing consumer protection and product safety.

Significance

- The EU's AI Act is the world's first comprehensive legal framework for AI. It therefore sets a benchmark and provides a reference point for other countries as they design their own AI rules.

- The Act's significance is expected to be similar to that of the EU's General Data Protection Regulation (GDPR), which has had a significant impact on global data privacy rules.

- The AI Act is likely to drive a global re-evaluation of AI development and deployment policies, much as the GDPR compelled companies to reconsider how they collect, store and use personal data. This may lead to a more standardised approach to AI governance, building public trust and creating a level playing field for firms.

Challenges

- The EU's regulatory track record raises concerns about the AI Act. The GDPR, for instance, has been criticised for restricting innovation. Some argue that its strict rules on data collection and use have created additional burdens for organisations, particularly small and medium-sized enterprises (SMEs). The complexity of GDPR compliance has also been cited as a barrier to innovation, with companies reluctant to build new data-driven products and services for fear of violating the regulation. Similar concerns exist for the AI Act.

- The Act's comprehensive categorisation of AI systems, together with its requirements for high-risk systems, may impose an administrative burden that stifles innovation, particularly among smaller businesses and startups.

- The Act may fail to keep pace with rapidly evolving AI technology. Overly rigid regulations may quickly become outdated and hinder the development of beneficial AI technologies.


AI Regulation in India

- The Union Ministry of Electronics and Information Technology (MeitY) is working on an AI framework.

- A MeitY advisory requires platforms in India to obtain government permission before launching "under-testing/unreliable" AI tools such as Large Language Models (LLMs) or generative AI.

- For India, developing a framework for responsible AI regulation is essential. Recent updates show a willingness to adapt regulation to the fast-changing AI landscape. India must strike a balance between protecting citizens' rights and encouraging innovation in AI.


Conclusion

- The European Union's AI Act is a major step forward in AI regulation and sets an example for other countries to follow. Adopting and adapting such policies poses challenges, particularly for countries such as India. Even so, creating responsible AI frameworks is critical to ensuring the ethical and productive use of AI technology.

Must Read Articles:

European Union’s Artificial Intelligence Act: https://www.iasgyan.in/daily-current-affairs/european-unions-artificial-intelligence-act


PRACTICE QUESTION

Q. A facial recognition system used for airport security flags a passenger as a potential security threat. However, upon investigation, it is discovered that the flag was triggered due to a bias in the training data – the system disproportionately identified people of a specific ethnicity as threats. In this scenario, which of the following actions would be MOST important to address the ethical concerns of AI bias?

A) Immediately remove the flagged passenger and increase security measures.

B) Fine-tune the facial recognition system with more balanced training data.

C) Develop a human oversight system to review all flagged individuals.

D) Alert the public about the limitations of the facial recognition system.

Answer: B

Explanation: Immediate security measures may sometimes be necessary, but option A acts on a biased output and does nothing to address the bias itself, so it is eliminated. A long-term solution requires tackling the bias, and option B addresses its root cause. Option C is a good secondary safeguard but does not correct the bias itself, so it is eliminated. Raising public awareness (D) is valuable but does not directly address the bias, so it is eliminated as well.