Description


Context

- Large Language Models (LLMs) are foundational to the capabilities of modern AI chatbots like ChatGPT and Gemini.

- They enable these AI systems to converse with humans by predicting the words or sentences most likely to come next.

- LLMs represent a significant advancement in the field of artificial intelligence, enabling machines to process and produce language in ways that were once limited to humans.

Details

Definition and Functionality

- LLMs are large, general-purpose language models capable of understanding and generating human-like text.

- LLMs serve as the backbone of generative AI, enabling machines to comprehend and produce human language across various industries and applications.

- Training Data: LLMs are trained on massive corpora of text data, often comprising billions or even trillions of words from diverse sources such as books, articles, websites, and other textual content available on the internet.

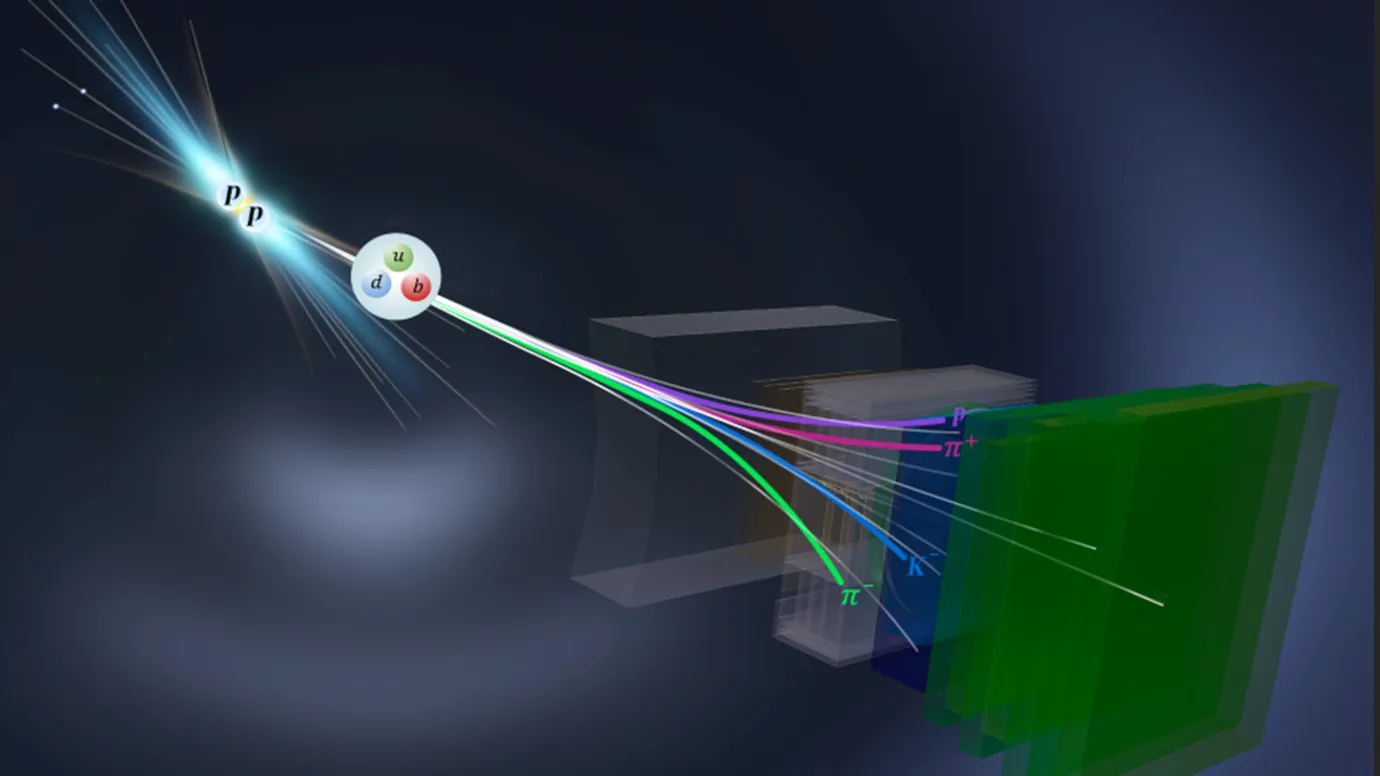

- Deep Learning Architecture: LLMs are built on deep neural networks. Earlier language models relied on recurrent neural networks (RNNs), but today's LLMs almost universally use the transformer architecture, as in the GPT (Generative Pre-trained Transformer) models.

Key Features

- Large Size: LLMs are characterized by their massive size, both in terms of training data and parameter count. They learn from extensive datasets, capturing patterns, structures, and relationships within language.

- General Purpose: LLMs are versatile and can address a broad range of language problems without being built for one specific task, although training and running them demands substantial computing resources.

- Autoregressive, Transformer-based, and Encoder-Decoder Architectures: These labels overlap rather than exclude one another. Most LLMs, including GPT-3 and Gemini, are transformer-based. Within that family, autoregressive models like GPT-3 generate text one token at a time, while encoder-decoder models are often used for translation tasks.

- Transformer Architecture: The Transformer architecture, introduced in the 2017 paper "Attention Is All You Need", forms the basis of many state-of-the-art LLMs. It relies on self-attention mechanisms that allow the model to weigh the importance of different words in a sentence when generating text.
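The self-attention idea described above can be illustrated with a minimal sketch in plain Python. It is a simplification under stated assumptions, not how any production model is implemented: the three two-dimensional word vectors are made up for illustration, and the learned query, key, and value projections that real transformers apply are replaced by the raw vectors themselves.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Assumption: each input vector acts as its own query, key, and value.
    Real models first map each vector through learned weight matrices.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all word vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a three-word sentence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one word "attends" to every word in the sentence. The weights in a row sum to 1, and words whose vectors point in similar directions receive larger weights.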

Types of LLMs

- Based on Architecture: Most LLMs are transformer-based. Within that family, they can be categorized as autoregressive (decoder-only) models such as GPT, encoder-only models such as BERT, and encoder-decoder models.

- Based on Training Data: LLMs can be pre-trained and fine-tuned, multilingual, or domain-specific, catering to different use cases and applications.

- Based on Size and Availability: LLMs vary in size and availability, ranging from open-source models freely available to proprietary models with restricted access.

Working Mechanism

- LLMs employ deep learning techniques, training artificial neural networks to predict the probability of words or sequences given previous context.

- They analyze patterns and relationships within training data to generate coherent and contextually relevant text responses.

- LLM performance generally improves as training data and parameter count grow, enhancing the ability to understand and generate human-like language. A deployed model, however, does not learn from new data unless it is retrained or fine-tuned.
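The core idea of predicting the next word from previous context can be sketched with a toy bigram model. This is only an illustration: it counts word pairs instead of training a neural network, it looks at a single preceding word rather than a long context, and the tiny corpus and the function names (`train_bigram`, `next_word_probs`, `generate`) are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each word follows each preceding word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Return P(next word | previous word) as a dict."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word was never seen with a follower
    return {word: n / total for word, n in followers.items()}

def generate(counts, start, max_words=5):
    """Greedy autoregressive generation: keep appending the likeliest next word."""
    output = [start]
    for _ in range(max_words):
        probs = next_word_probs(counts, output[-1])
        if not probs:
            break
        output.append(max(probs, key=probs.get))
    return " ".join(output)

# Made-up training text for illustration only.
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
print(generate(model, "the", max_words=4))
```

An LLM follows the same predict-then-append loop, but it estimates the probabilities with a neural network conditioned on the whole preceding context, and it usually samples from the distribution instead of always taking the single most likely word.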

Advantages

- LLMs have a wide range of applications across domains, including content generation, conversation, virtual assistance, sentiment analysis, language translation, and text summarization.

- Their versatility, generalization capabilities, and ability to improve with more data make LLMs highly advantageous for various AI-driven tasks and applications.

Challenges

- Bias and Fairness: LLMs may inadvertently perpetuate biases present in the training data, leading to biased or unfair outcomes, particularly when they are used in sensitive applications such as automated decision-making systems.

- Safety and Misuse: LLMs have the potential to generate harmful or misleading content, including misinformation, hate speech, and deepfakes. Ensuring the responsible use of LLMs is essential to mitigate these risks.

- Privacy and Data Security: LLMs trained on sensitive or personal data raise concerns about privacy and data security. Safeguarding user data and implementing robust privacy-preserving measures are critical considerations.

Notable Large Language Models

- GPT (Generative Pre-trained Transformer) Series: Developed by OpenAI, the GPT series includes models such as GPT-2, GPT-3, and subsequent iterations, known for their large-scale, state-of-the-art performance in natural language generation.

- BERT (Bidirectional Encoder Representations from Transformers): Introduced by Google, BERT is a transformer-based model pre-trained on large text corpora and fine-tuned for various NLP tasks, achieving significant improvements on tasks such as question answering and text classification.

Conclusion

Large Language Models represent a significant advancement in artificial intelligence, enabling machines to understand and generate human-like text with unprecedented accuracy and fluency. While LLMs hold immense potential for a wide range of applications, addressing ethical considerations, ensuring responsible use, and advancing research in areas such as bias mitigation and interpretability are essential for realizing their full benefits while minimizing risks.


PRACTICE QUESTION

Q. Large Language Models represent a significant advancement in artificial intelligence, enabling machines to understand and generate human-like text with unprecedented accuracy and fluency. Examine. (150 words)
