Description

Source: BusinessStandard


Context

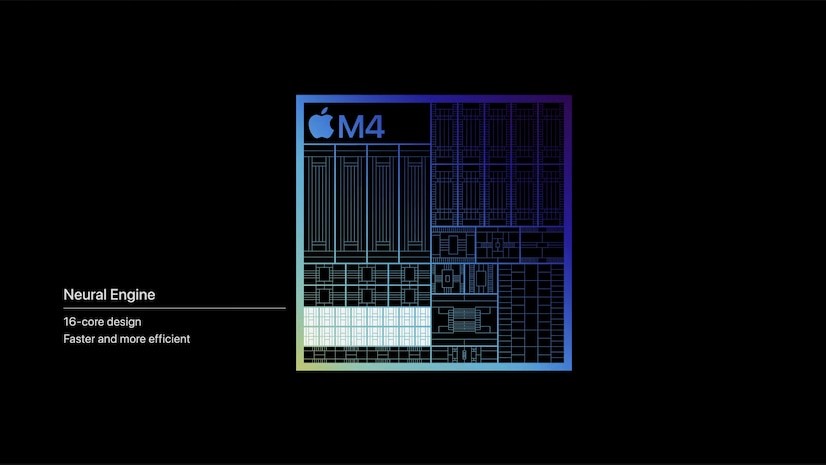

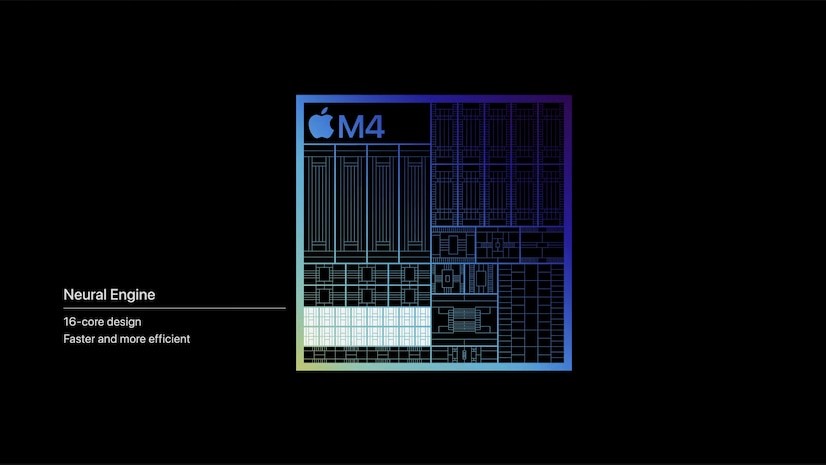

- The 16-core Neural Engine in Apple's M4 chip is a significant advance in Apple's hardware lineup, particularly for AI-related tasks.

Details

What is NPU (Neural Processing Unit)?

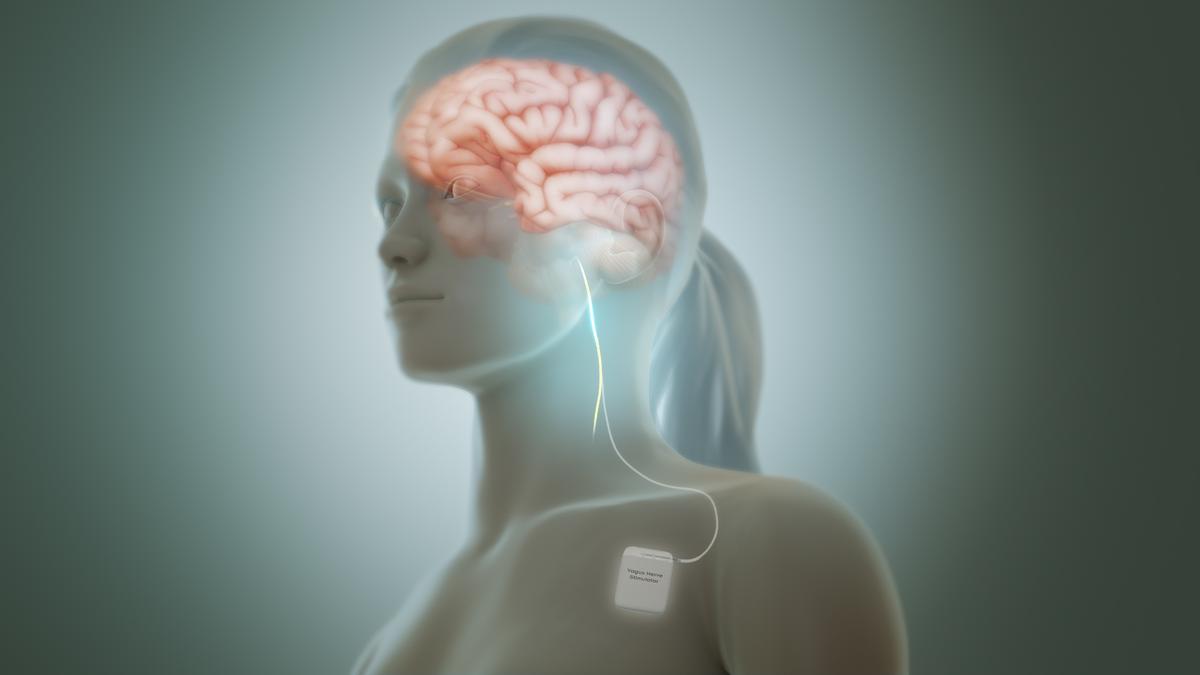

- An NPU, or Neural Processing Unit, is a specialized hardware component designed specifically for accelerating neural network processes.

- Neural networks, inspired by the human brain, are the backbone of many AI-related tasks. NPUs excel at executing the complex computations involved in neural network operations.

- NPUs are optimized for tasks such as speech recognition, natural language processing, image and video processing (e.g., object detection, image segmentation), recommendation systems, and more.

Integration in Consumer Devices:

- System-on-Chip (SoC): In consumer devices like smartphones, laptops, and tablets, the NPU is usually integrated on the same chip as the CPU and GPU, as one block of an SoC.

- By offloading AI-related computations to dedicated NPUs, devices can achieve improved performance and energy efficiency, enabling faster and more responsive AI-driven experiences for users.

Role in Data Centers:

- In data centers and enterprise environments, NPUs may be deployed as discrete processors, separate from CPUs and GPUs.

- Dedicated NPUs in data centers enhance scalability and efficiency for AI workloads, allowing for faster and more cost-effective processing of large-scale neural network models.

Key Features of NPUs:

- Parallel Processing: NPUs leverage parallel processing to execute multiple neural network operations concurrently, accelerating computation.

- Low Precision Arithmetic: Many NPUs support low-precision arithmetic (e.g., 8-bit or even lower) to reduce compute cost, memory traffic, and energy consumption, usually at a small cost in accuracy.

- High Bandwidth Memory: NPUs are often paired with fast memory (on-chip buffers in consumer devices, high-bandwidth memory in data center parts) to handle large neural network models and datasets efficiently.

- Hardware Acceleration: NPUs employ hardware acceleration techniques, such as systolic arrays or other dedicated matrix-multiplication units, to expedite neural network computations.
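
The low-precision idea above can be made concrete with a small sketch. The Python below is only an illustration, assuming simple symmetric 8-bit quantization with one scale per vector; the function names and sample numbers are hypothetical, and real NPUs and their toolchains may use different schemes.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def int8_dot(weights, activations):
    """Dot product computed on 8-bit integers, rescaled to a float at the end."""
    q_w, scale_w = quantize_int8(weights)
    q_a, scale_a = quantize_int8(activations)
    # Integer multiply-accumulate: the kind of operation low-precision hardware speeds up.
    accumulator = sum(w * a for w, a in zip(q_w, q_a))
    return accumulator * scale_w * scale_a


# Made-up illustrative values, not taken from any real model.
weights = [0.12, -0.5, 0.33, 0.9]
activations = [1.0, 0.25, -0.75, 0.4]

exact = sum(w * a for w, a in zip(weights, activations))
approx = int8_dot(weights, activations)
print(f"float result: {exact:.4f}")
print(f"int8 result:  {approx:.4f}")
```

The two printed values should be close but not identical: the gap is the accuracy traded away for cheaper integer arithmetic.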

NPU Applications:

- Image Recognition: NPUs excel in tasks like object detection, classification, and segmentation in images and videos.

- Natural Language Processing (NLP): NPUs are used for tasks such as language translation, sentiment analysis, and text summarization.

- Autonomous Vehicles: NPUs play a crucial role in enabling real-time perception and decision-making capabilities in autonomous vehicles.

- Medical Imaging: NPUs accelerate medical image analysis tasks, aiding in diagnosis, prognosis, and treatment planning.

- IoT and Edge Computing: NPUs are deployed in edge devices and IoT applications to perform inference tasks locally, reducing latency and bandwidth requirements.

Distinctions between NPUs, CPUs, and GPUs

CPU (Central Processing Unit):

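- Sequential Computing: CPUs are built around a small number of powerful cores, each optimized to run a stream of instructions quickly in sequence rather than to run thousands of operations at once.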

- Versatility: CPUs are designed for general-purpose computing tasks, suitable for a wide range of applications and workloads.

- Complex Tasks: While CPUs can handle complex computations, their limited number of cores is not optimal for highly parallelizable tasks like deep learning inference.

GPU (Graphics Processing Unit):

- Parallel Computing: GPUs excel at parallel computing, featuring thousands of cores optimized for simultaneous execution of tasks.

- Graphics Rendering: GPUs were initially developed for rendering graphics in video games and multimedia applications. They are highly efficient at parallel processing but were not originally designed for AI workloads, although many recent GPUs include dedicated matrix units for them.

- AI Workloads: While GPUs can handle AI workloads, they may not be as power-efficient or optimized for machine learning tasks compared to dedicated NPUs.

NPU (Neural Processing Unit):

- Dedicated for AI: NPUs are purpose-built for accelerating neural network computations, leveraging parallel processing specifically tailored for machine learning operations.

- Efficiency: NPUs are optimized for power efficiency in AI workloads and, for the neural network operations they support, typically deliver more work per watt than CPUs and GPUs.

- Specialized Circuits: NPUs incorporate specialized circuits and architectures designed to execute neural network operations efficiently, making them well-suited for tasks like image recognition, natural language processing, and more.

Key Differences:

- Parallelism: While CPUs mainly use sequential computing, NPUs and GPUs leverage parallel computing for faster and more efficient processing, with NPUs being specifically optimized for AI tasks.

- Specialization: NPUs are dedicated to AI workloads, whereas GPUs serve multiple purposes, including graphics rendering and AI tasks alongside other computations.

- Efficiency: NPUs are designed to maximize performance while minimizing power consumption, making them ideal for battery-powered devices and data center environments where energy efficiency is crucial.
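
To see why parallelism matters so much for neural networks, it helps to count multiply-accumulate (MAC) operations. The sketch below is a rough, idealized estimate only: the layer size and the numbers of MAC units are hypothetical, and it ignores memory access, scheduling, and clock speed, all of which matter on real hardware.

```python
import math


def macs_dense_layer(num_inputs, num_outputs):
    """A fully connected layer needs one multiply-accumulate per weight."""
    return num_inputs * num_outputs


def ideal_cycles(total_macs, mac_units):
    """Idealized cycle count if every MAC unit is busy on every cycle."""
    return math.ceil(total_macs / mac_units)


# Hypothetical layer: 1024 inputs fully connected to 1024 outputs.
layer_macs = macs_dense_layer(1024, 1024)

# Hypothetical hardware: 1 MAC per cycle, 8 per cycle, 4096 per cycle.
for units in (1, 8, 4096):
    print(f"{units:>5} MAC units -> {ideal_cycles(layer_macs, units):>9,} cycles")
```

Because every MAC in the layer is independent of the others, the work divides cleanly across however many units the hardware provides, which is the property that GPUs and NPUs are built to exploit.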

On-Device AI and the Role of NPUs

- Traditionally, large language models like GPT-3 were too massive to run efficiently on consumer devices due to computational and memory constraints. As a result, these models were typically deployed in the cloud, requiring network connectivity for inference.

- With the introduction of smaller and more efficient language models such as Google's Gemma, Microsoft's Phi-3, and Apple's OpenELM, there's a growing trend towards on-device AI. These compact models are designed to operate entirely on the user's device, eliminating the need for constant network access and addressing privacy concerns associated with cloud-based processing.

- As on-device AI models become more prevalent, the role of NPUs becomes even more critical.

- NPUs are specifically optimized for executing AI workloads efficiently, making them ideal for powering on-device AI applications.

- By leveraging NPUs, devices can provide responsive and seamless AI experiences without relying on cloud processing, enhancing user privacy and overall performance.
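
A back-of-the-envelope calculation shows why model size decides what can run on a device. The sketch below estimates the memory needed for model weights alone, assuming GPT-3's published size of 175 billion parameters and a hypothetical compact model of 3 billion parameters; it ignores activations and other runtime memory, so real requirements are higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# GPT-3's parameter count is published; the compact model is a hypothetical example.
gpt3_params = 175e9
compact_params = 3e9

print(f"GPT-3, 16-bit weights:         {weight_memory_gb(gpt3_params, 16):.1f} GB")
print(f"Compact model, 16-bit weights: {weight_memory_gb(compact_params, 16):.1f} GB")
print(f"Compact model, 4-bit weights:  {weight_memory_gb(compact_params, 4):.1f} GB")
```

Under these assumptions the large model needs hundreds of gigabytes for its weights, while the compact model fits in a few gigabytes or less, which is within reach of the memory in a phone or laptop.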



PRACTICE QUESTION

Q. While CPUs, GPUs, and NPUs all contribute to computing capabilities, NPUs stand out for their specialization in accelerating AI workloads, offering superior efficiency and performance for tasks like deep learning inference. Comment. (250 words)
